How depth information is gathered

The iPhone 7 Plus was the first iPhone to offer two camera modules: a standard wide-angle lens and a telephoto lens. In addition to two distinct angles of view, the iPhone 7 Plus introduced users to Portrait Mode, which uses computational photography to create a faux shallow depth-of-field effect, where the subject is in focus and the background is blurry.

Portrait Mode compares the images captured by the two cameras and uses the differences between them to determine depth within the photograph, much in the way your two eyes help you determine depth in the real world. Essentially, with some AI assistance, the iPhone can tell which objects are in the foreground and which are in the background. A selective blur can then be applied to areas of the frame, and the amount of blur can even increase with distance from the subject for a more realistic effect. Combined with face detection, the mode is especially useful for portraits, hence the name.
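To make the two-eyes comparison concrete, here is a minimal Python sketch of the textbook stereo relationship, in which an object's distance is inversely proportional to how far it shifts between the two views (its disparity). This is a generic illustration and not Apple's actual pipeline; the function name, the 2,800-pixel focal length, and the 1 cm spacing between lenses are assumptions chosen only for the example.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Estimate distance (meters) to a point from its shift between two views.

    Textbook pinhole-stereo relation: depth = focal length * baseline / disparity.
    The parameter values used below are illustrative assumptions.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


# Assumed numbers: 2800 px focal length, lenses 1 cm apart.
near = depth_from_disparity(28.0, 2800.0, 0.01)  # large shift: close object
far = depth_from_disparity(7.0, 2800.0, 0.01)    # small shift: distant object
print(f"near: {near:.2f} m, far: {far:.2f} m")
```

In this sketch, an object that shifts four times as far between the two views is estimated to be four times closer.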

However, until iOS 11, Portrait Mode was only available through the built-in camera app, and users had no control over the strength of the effect. With the new depth APIs (application programming interfaces) in iOS 11, third-party developers can now take advantage of the same computational photography used in Portrait Mode.

Seeing is believing

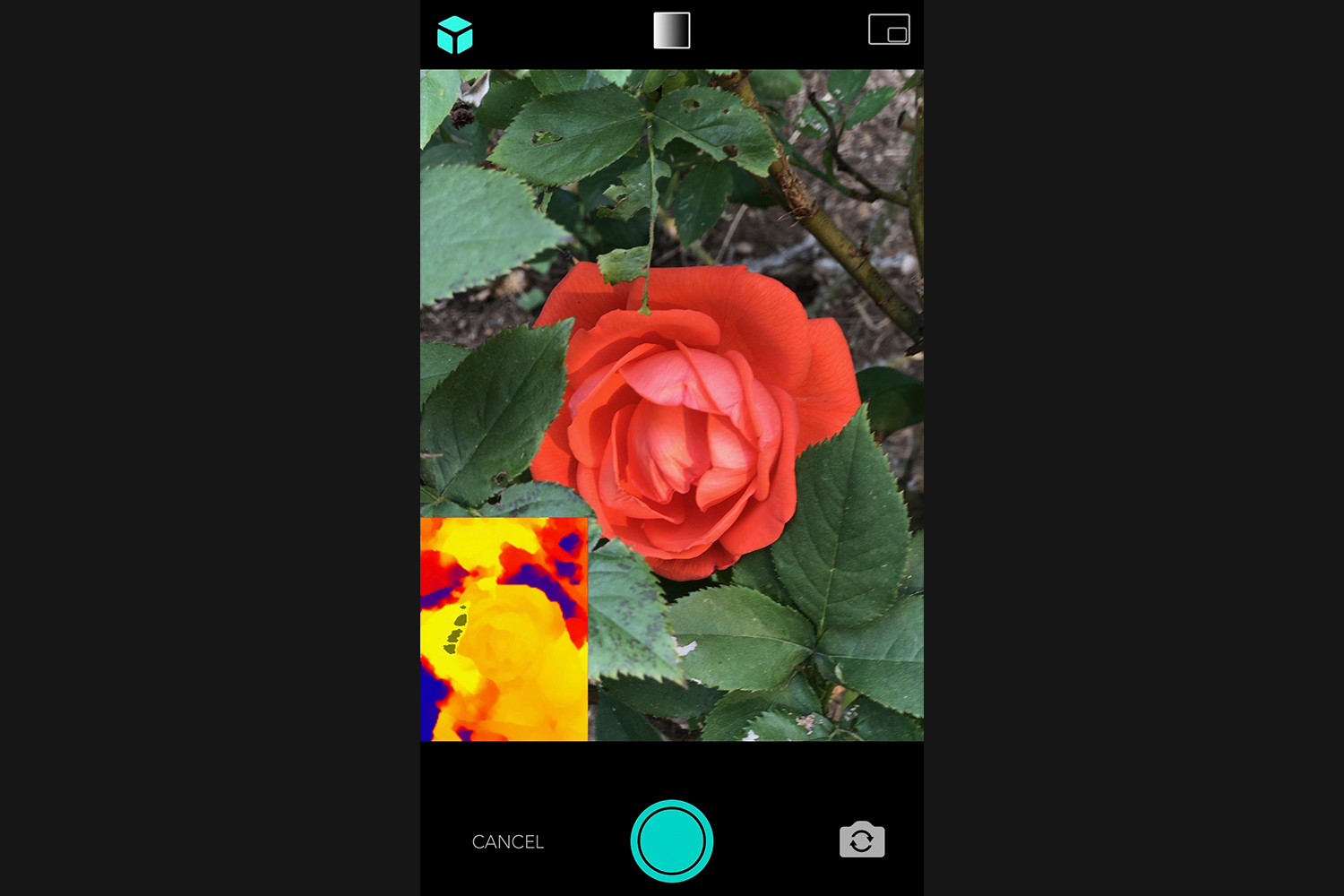

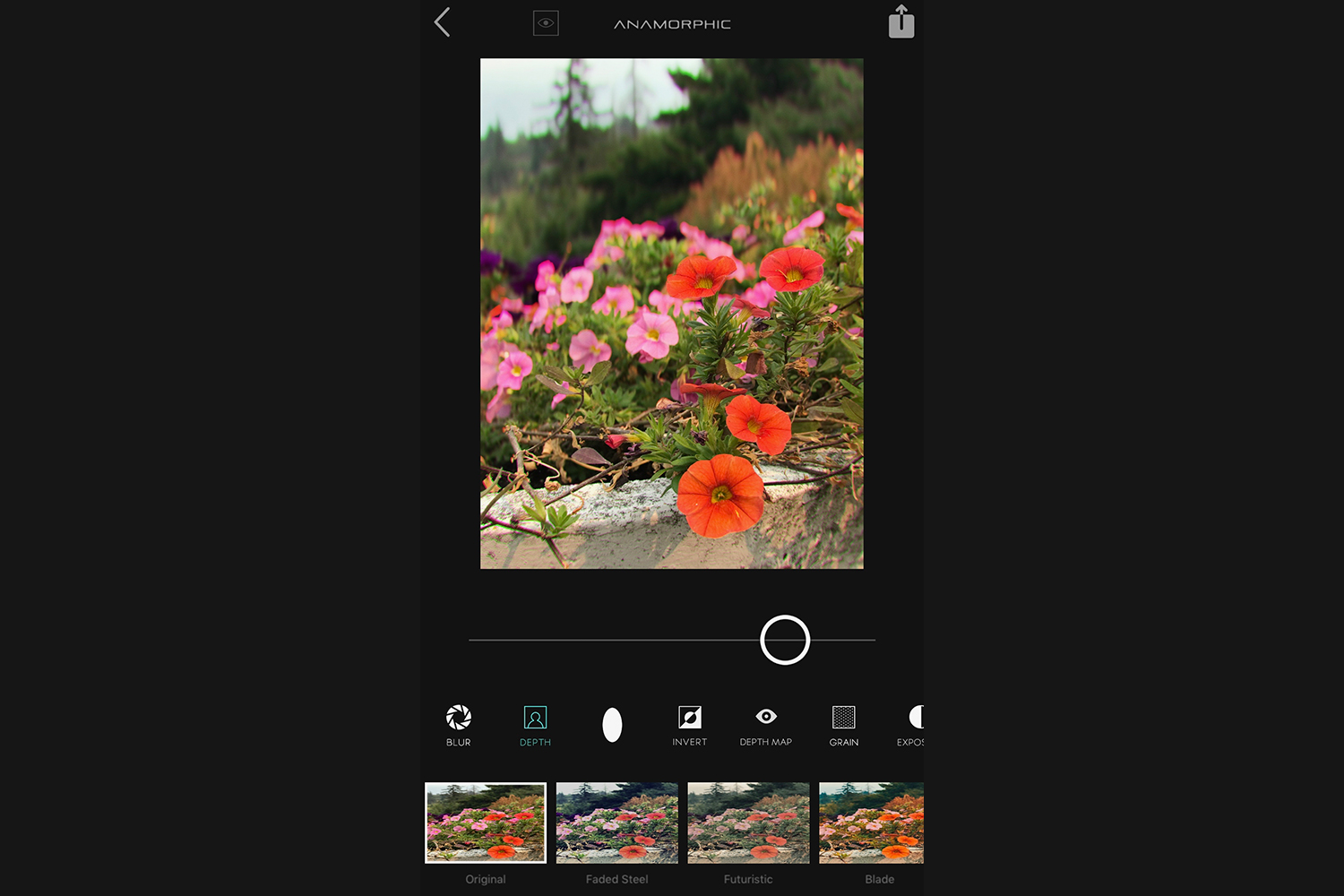

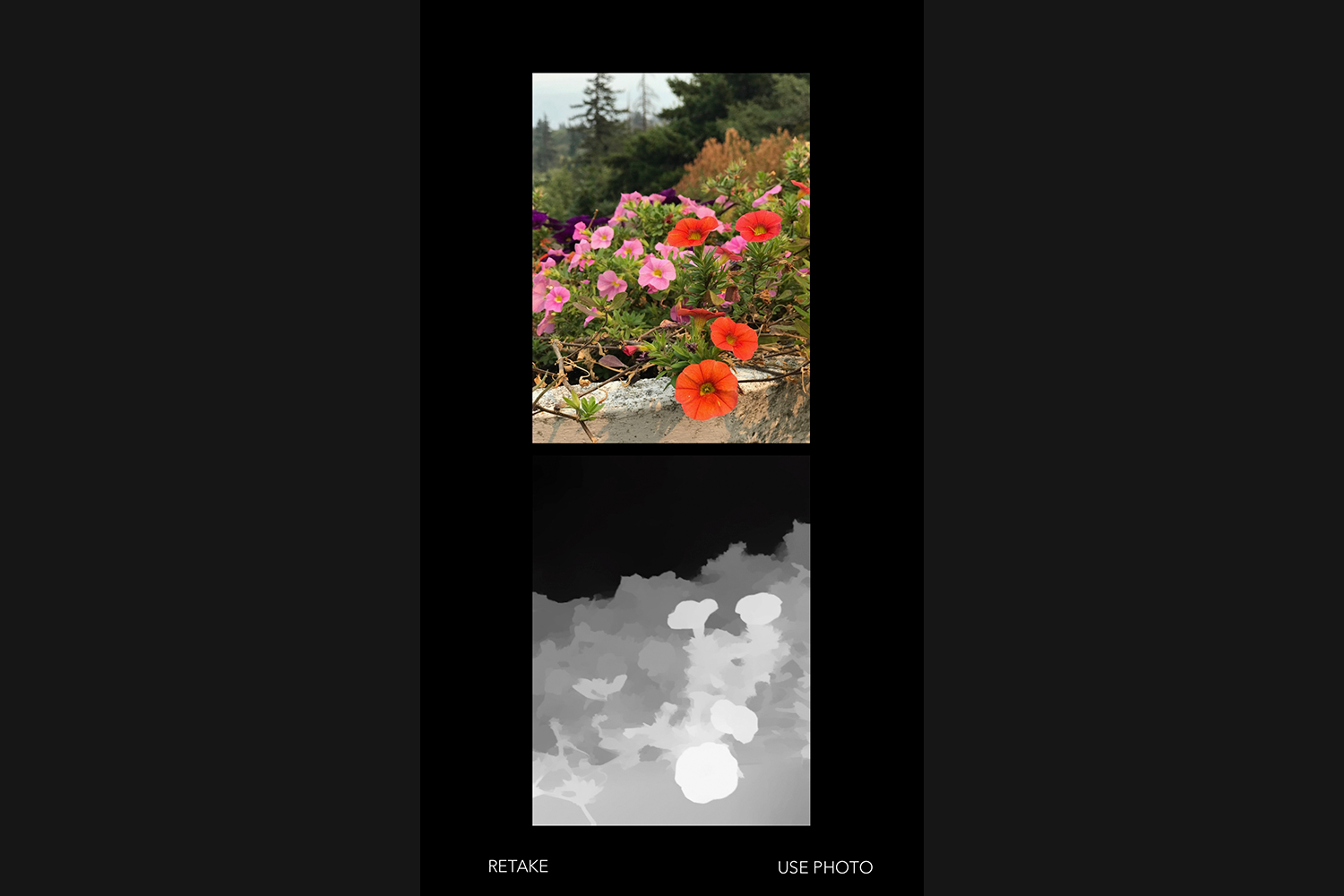

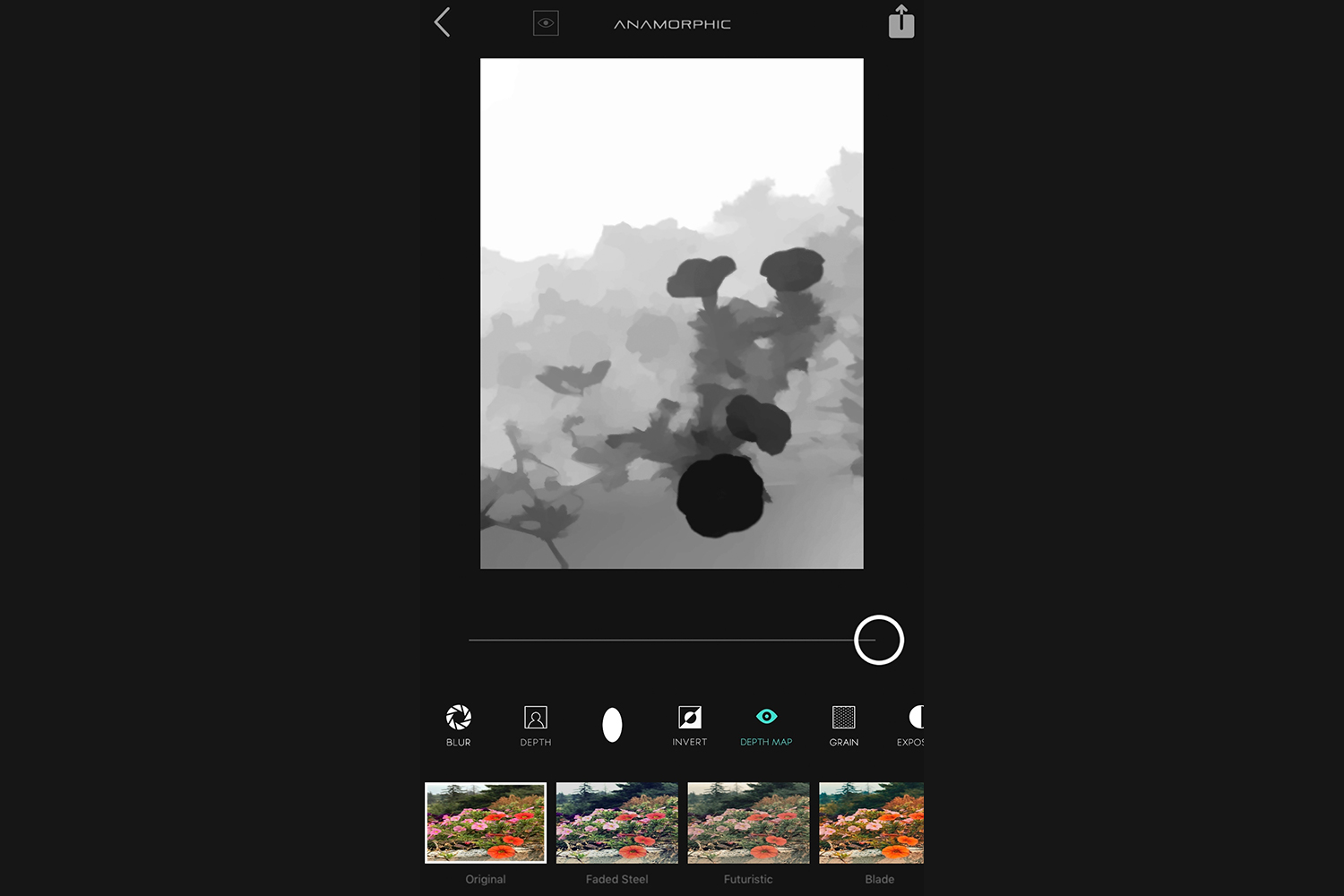

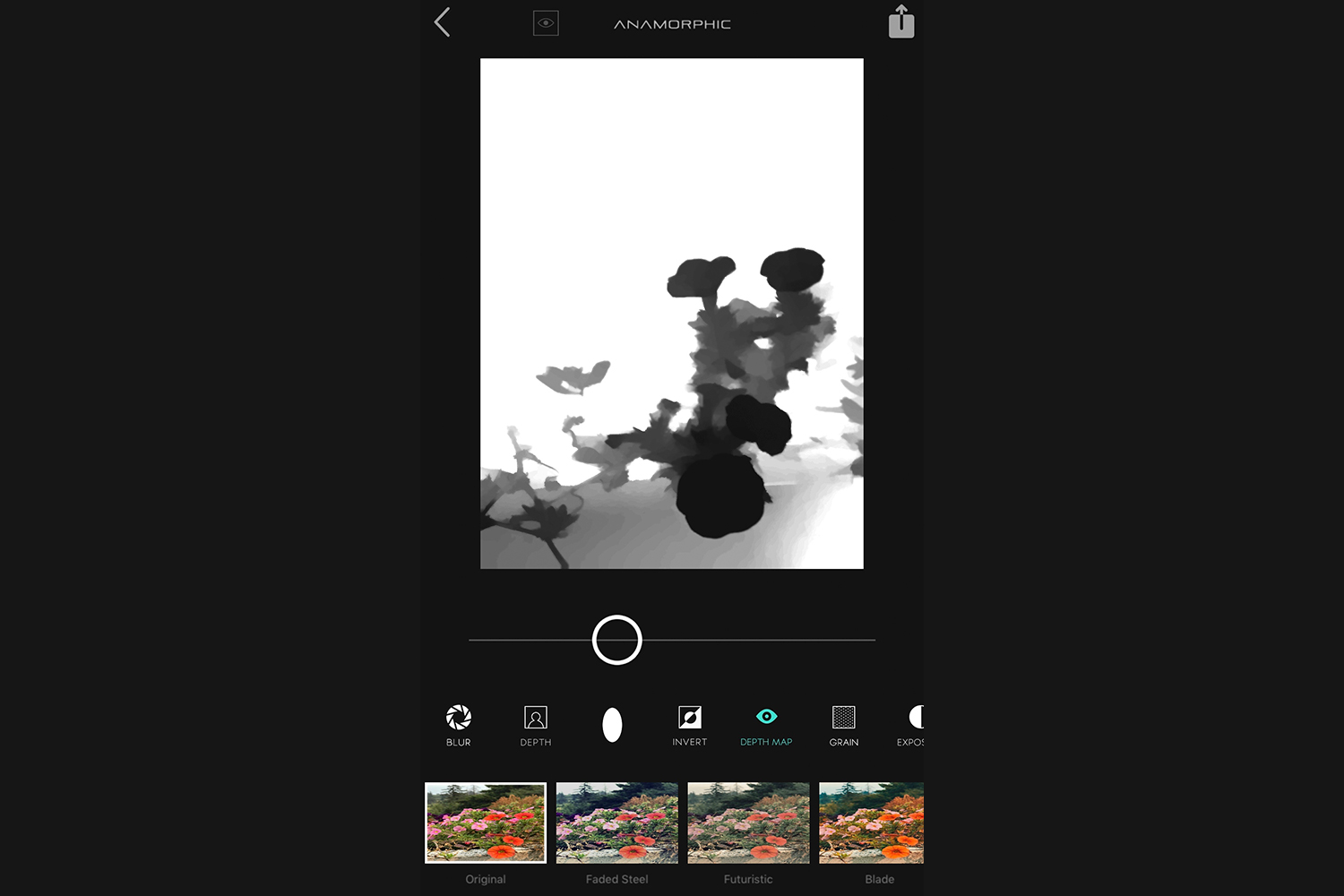

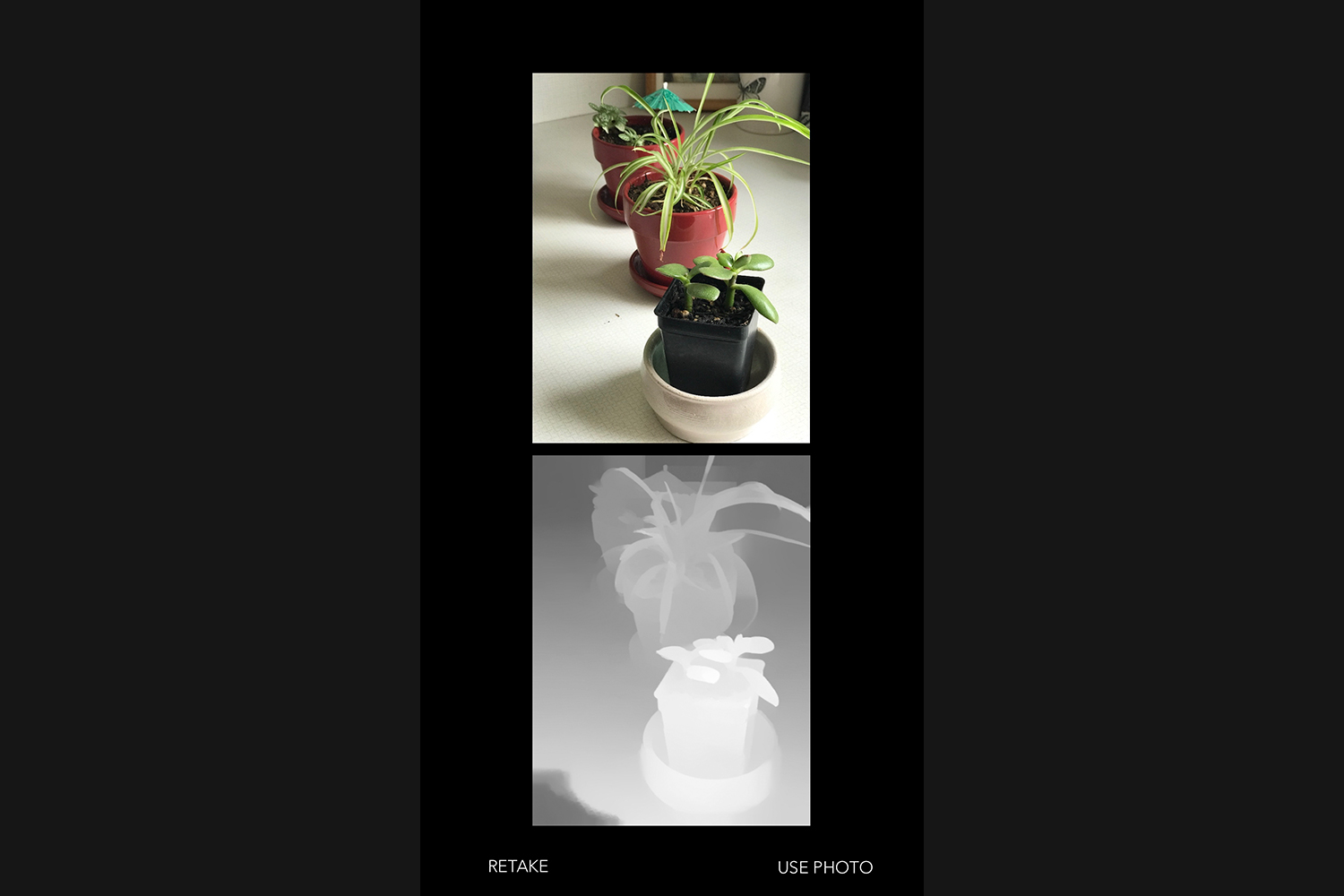

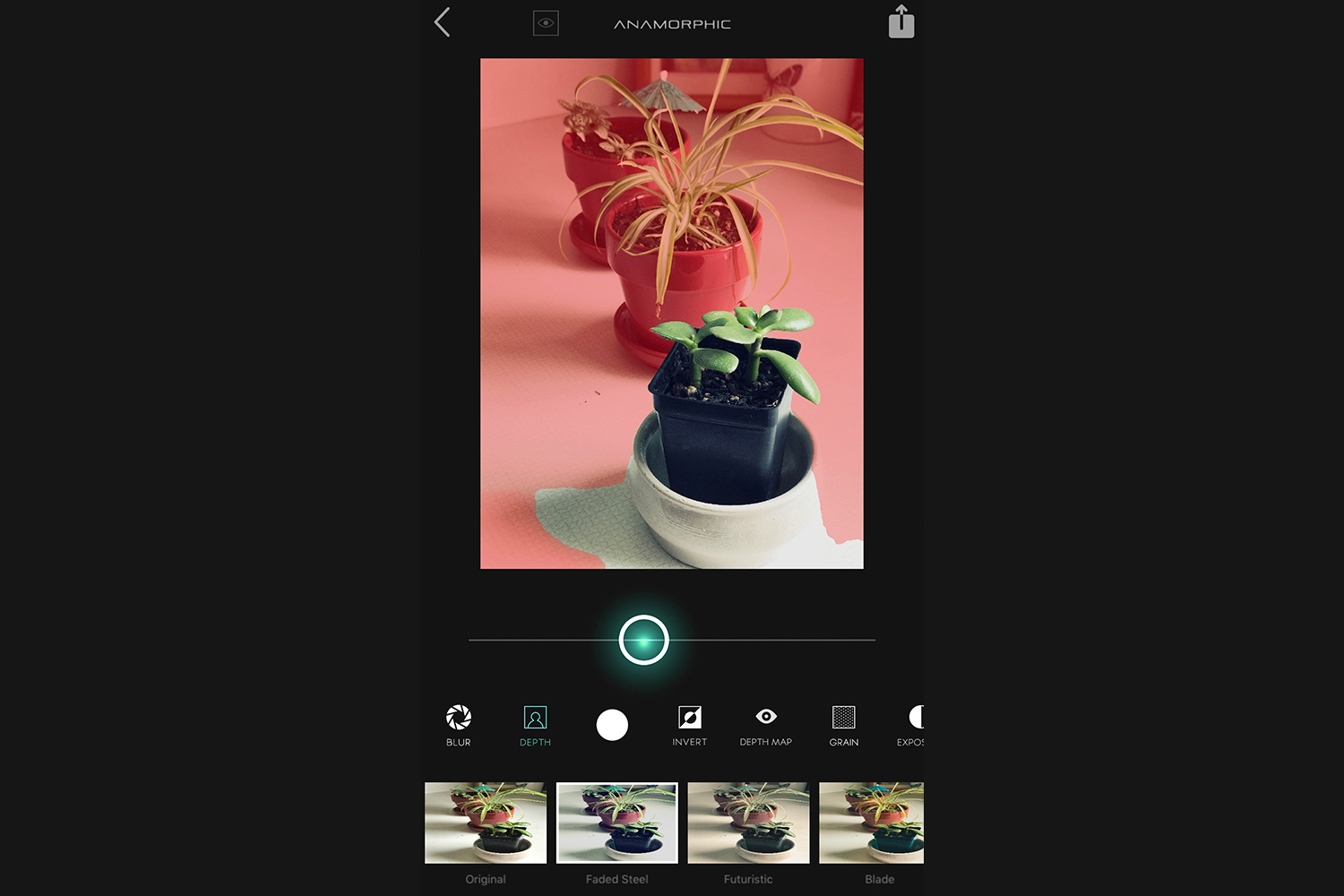

From a purely technical perspective, Anamorphic offers a glimpse behind the curtain of how Portrait Mode works by actually displaying a live depth map next to the camera preview image. This lets you see exactly what the iPhone is seeing in terms of depth, and for those of us on the nerdier side, it’s a welcome bit of information.

For anyone just out to take pretty pictures, the visualization of the depth map may not matter as much, but it can still provide useful information. For one, as good as the iPhone 7 Plus is at determining depth, it is not perfect. By seeing the actual depth map, you can locate errors before you take the picture. Sometimes, just adjusting your distance or angle to the subject can help clean things up a bit.

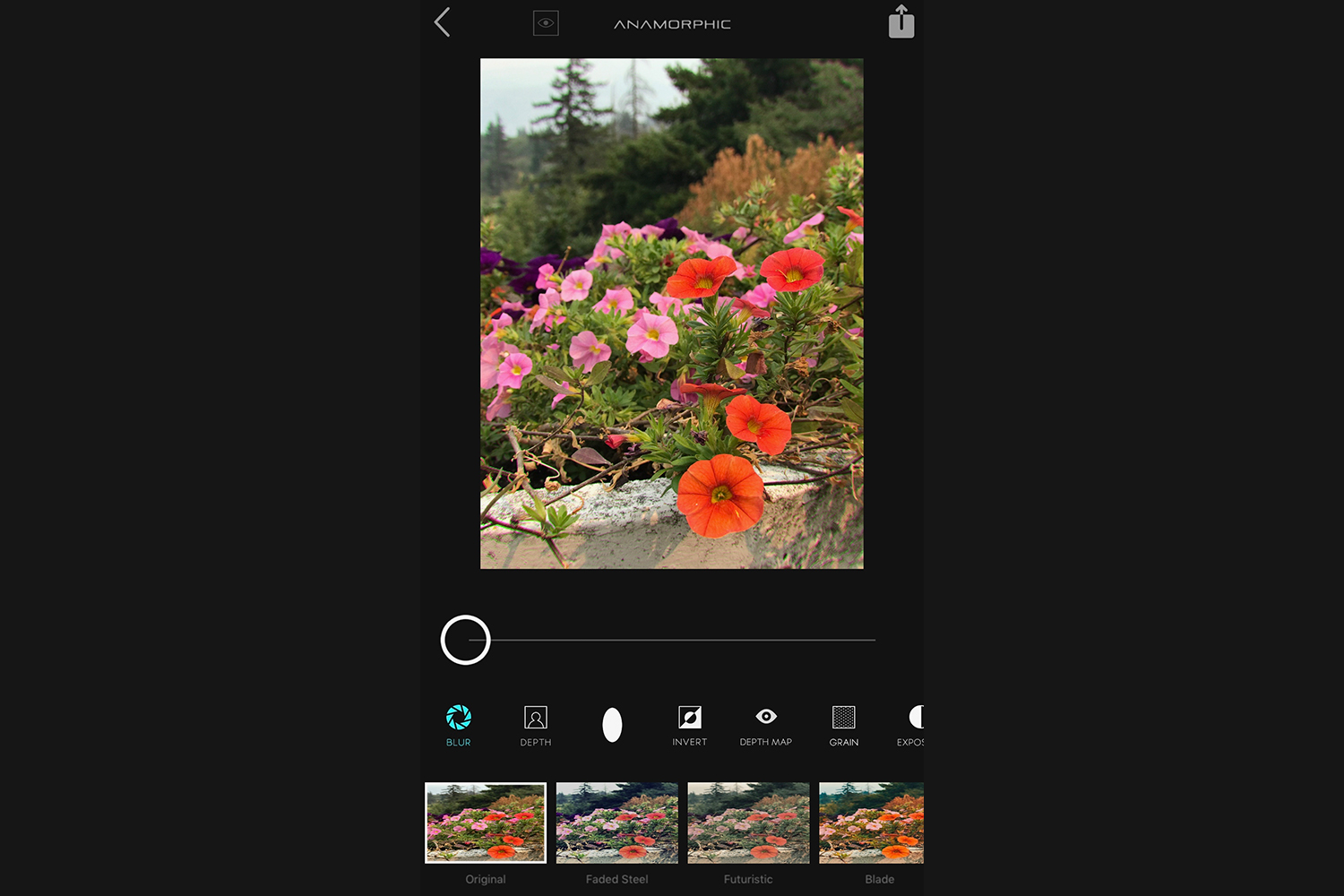

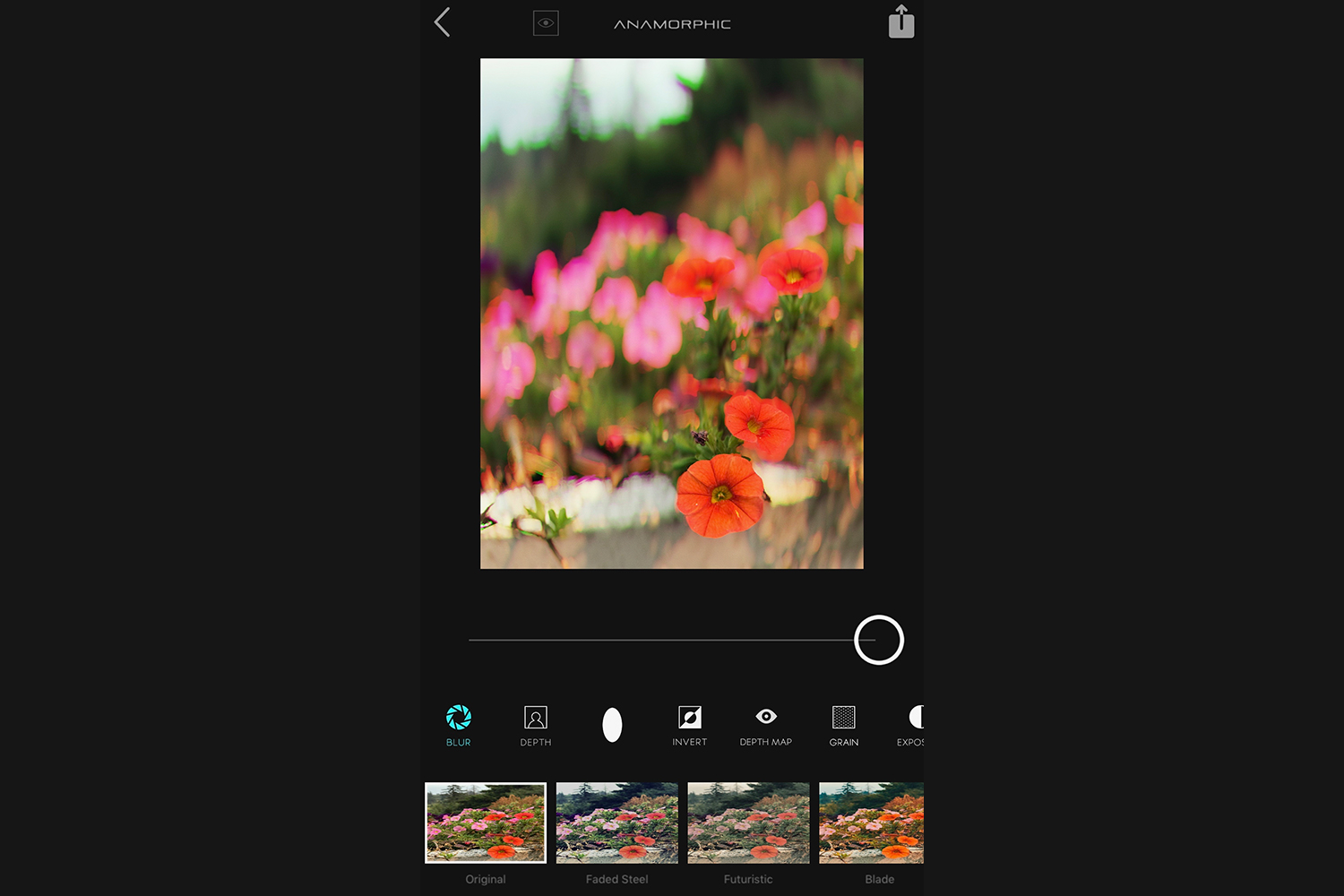

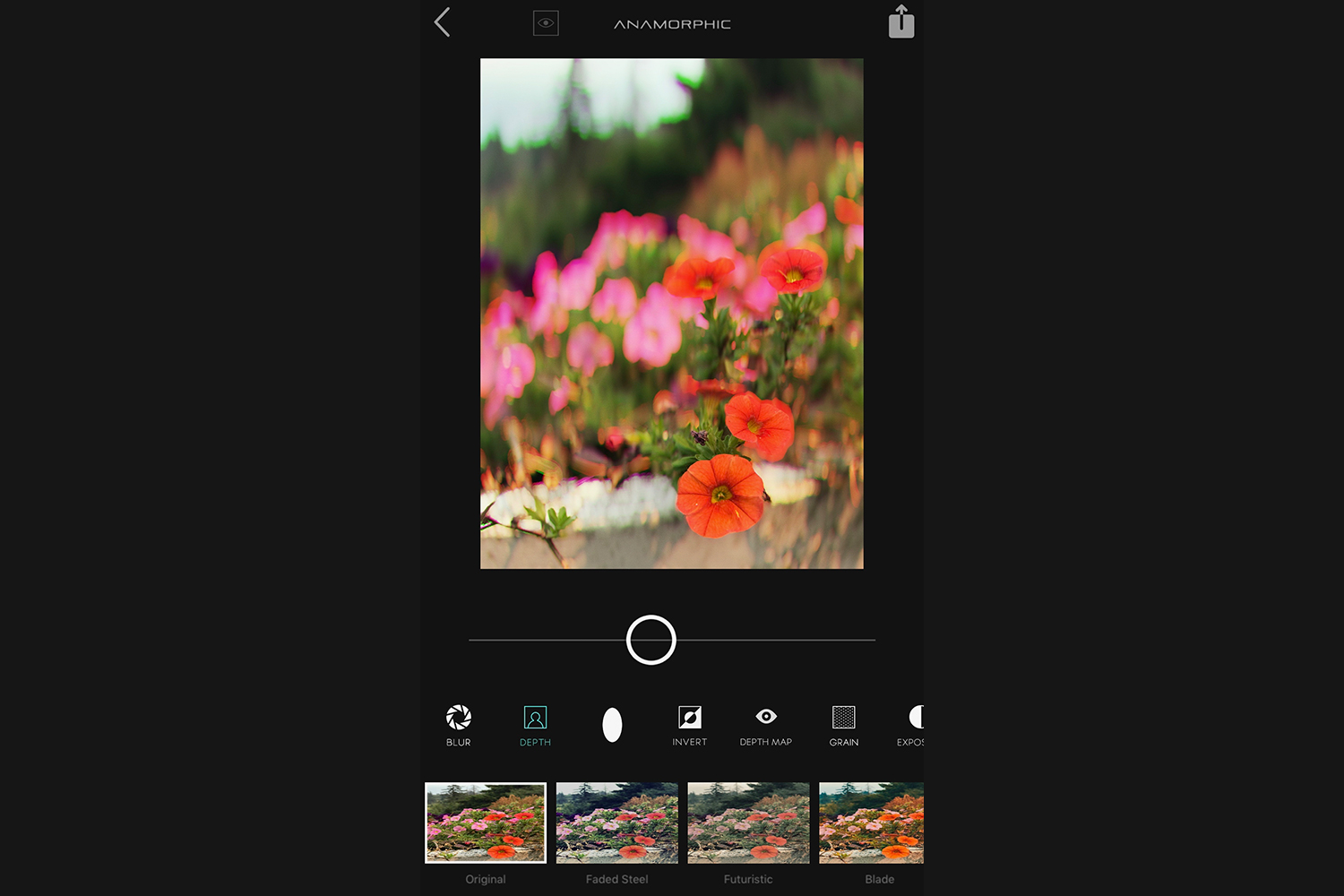

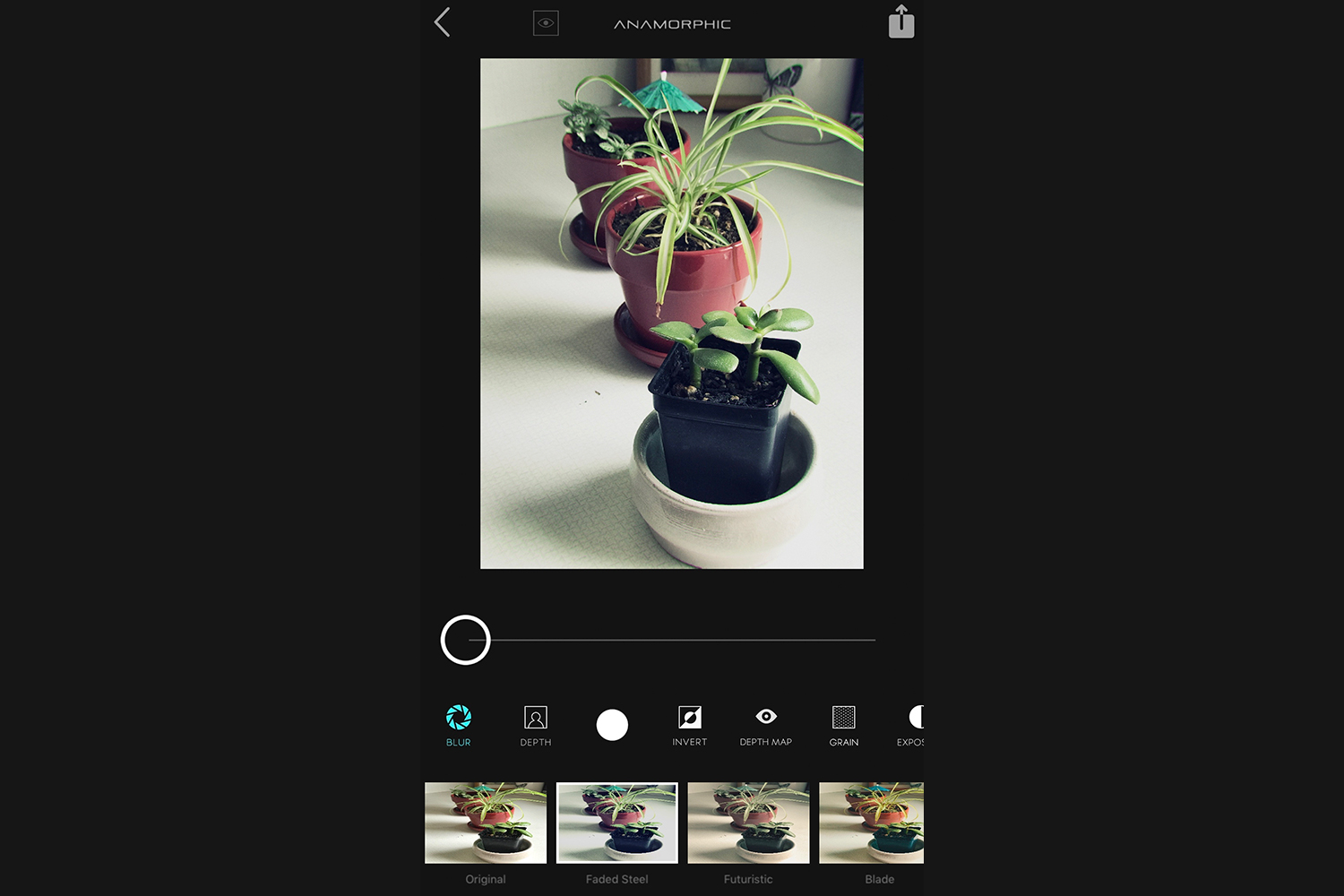

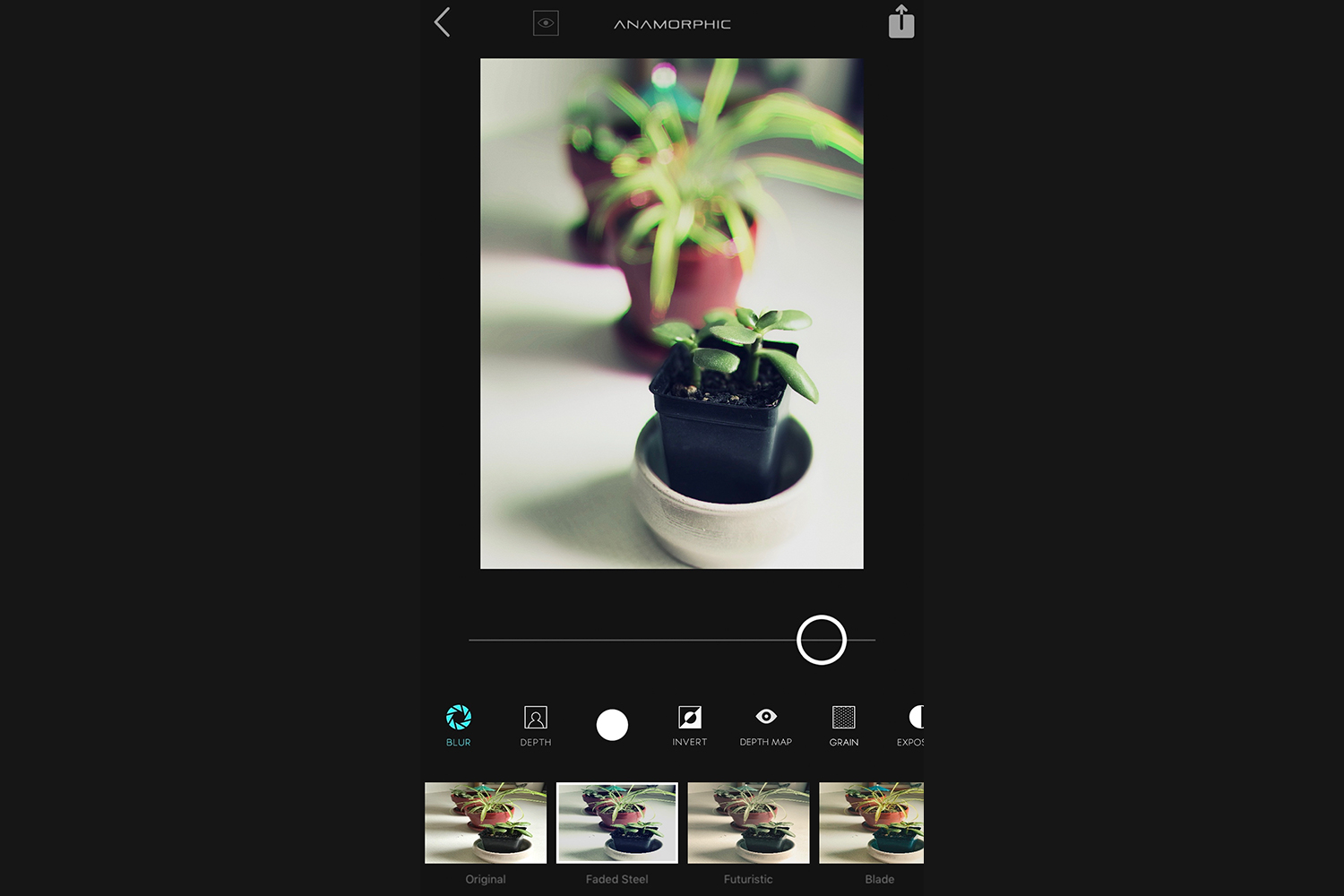

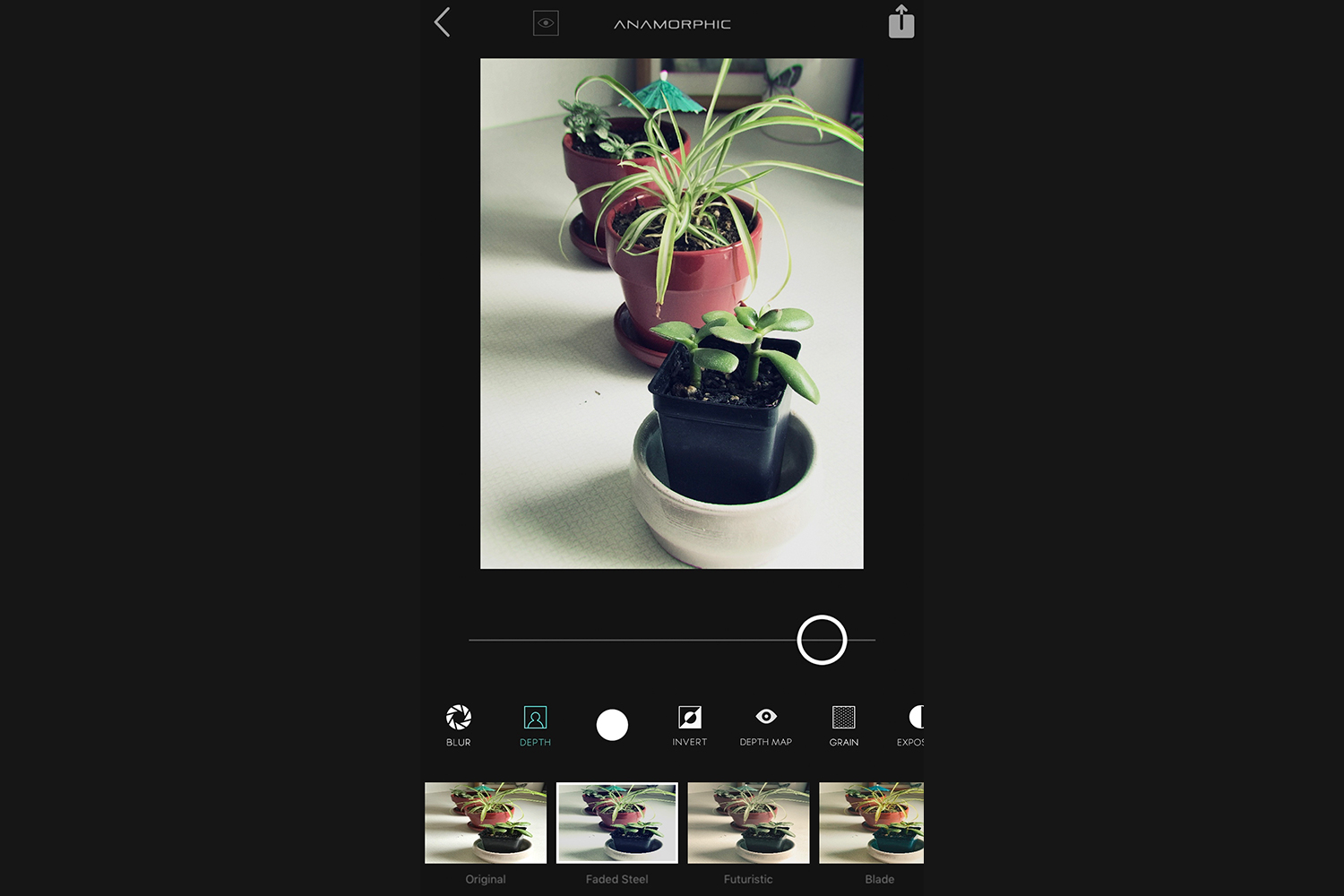

But the depth map also comes into play after the fact. Once a photo is taken, you can actually adjust the depth map within Anamorphic, effectively shortening the available depth and determining where the blur will begin to set in. You can also control the amount of blur itself, akin to adjusting the depth of field by opening or closing the aperture on a DSLR or mirrorless camera lens.
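For the curious, the two controls described above can be sketched as a simple mapping from depth to blur radius. This is our own illustrative Python, not Anamorphic's code; `blur_radius`, `blur_start_m`, `strength_px`, and `ramp_m` are hypothetical names, and the linear ramp is an assumption.

```python
def blur_radius(depth_m, blur_start_m, strength_px, ramp_m=1.0):
    """Map a pixel's depth to a blur radius in pixels.

    blur_start_m: depth at which blur begins to set in (hypothetical control).
    strength_px:  maximum blur radius (hypothetical control).
    ramp_m:       distance over which blur grows from zero to maximum (assumed linear).
    """
    if ramp_m <= 0:
        raise ValueError("ramp_m must be positive")
    if depth_m <= blur_start_m:
        return 0.0  # at or in front of the cutoff: stays sharp
    progress = min((depth_m - blur_start_m) / ramp_m, 1.0)
    return strength_px * progress


# A tiny made-up depth map in meters: subject near 0.8 m, wall at 3 m.
depth_map = [[0.8, 0.9, 3.0],
             [0.8, 1.5, 3.0]]
radii = [[blur_radius(d, blur_start_m=1.0, strength_px=12.0) for d in row]
         for row in depth_map]
print(radii)
```

Raising `blur_start_m` keeps more of the scene sharp, while raising `strength_px` plays the role of opening the aperture.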

And, true to its name, the app even gives an option for the style of blur: regular or anamorphic, the latter being an imitation of anamorphic cinema lenses. All of this provides much more control than the built-in Portrait Mode (which is a simple, binary decision of “on” or “off”).
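Anamorphic cinema lenses are known for rendering out-of-focus highlights as tall ovals rather than circles. As a rough sketch of how the two blur styles could differ, the Python below builds a round blur kernel and a horizontally squeezed one. We have not seen how the app implements its blur; `bokeh_kernel` and its `squeeze` parameter are hypothetical.

```python
def bokeh_kernel(radius_px, squeeze=1.0):
    """Build a binary blur kernel as a list of rows.

    squeeze=1.0 gives a round disc; squeeze > 1 narrows the disc
    horizontally, producing a tall oval (an assumed stand-in for
    anamorphic-style bokeh).
    """
    if radius_px < 1 or squeeze <= 0:
        raise ValueError("radius_px must be >= 1 and squeeze must be positive")
    half_width = radius_px / squeeze
    kernel = []
    for y in range(-radius_px, radius_px + 1):
        row = []
        for x in range(-radius_px, radius_px + 1):
            inside = (x / half_width) ** 2 + (y / radius_px) ** 2 <= 1.0
            row.append(1 if inside else 0)
        kernel.append(row)
    return kernel


regular = bokeh_kernel(4)
oval = bokeh_kernel(4, squeeze=2.0)
for row in oval:
    print("".join("#" if cell else "." for cell in row))
```

Both kernels are the same height, but the squeezed one is roughly half as wide, so each blurred highlight would spread more vertically than horizontally.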

In addition to interacting with the depth data, Anamorphic offers a number of Instagram-esque filters as well as some basic editing options that let you adjust exposure or add film grain or a vignette.

Don’t throw away your DSLR yet

For all that Anamorphic offers, it also makes clear the iPhone’s shortcomings. Basic, two-lens computational photography has some advantages over traditional cameras, such as the ability to adjust the amount of blur after the shot. However, there are still many limitations.

Portrait Mode users are undoubtedly familiar with the “Place subject within 8 feet” warning that displays when the camera is too far from the subject for Portrait Mode to work correctly. This is a result of the two camera modules being so close to each other, which means after a certain distance (8 feet, apparently) there is no longer a significant enough difference between the two images to determine depth.
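A bit of arithmetic shows why closely spaced lenses run out of depth information so quickly. The Python sketch below uses the textbook stereo relation (disparity = focal length × lens spacing ÷ distance) to ask how much farther away an object must be before its shift between the two images changes by a single pixel. The 2,800-pixel focal length and 1 cm lens spacing are numbers we picked for illustration and not measurements of the phone, and `depth_step_m` is a hypothetical helper.

```python
def depth_step_m(depth_m, focal_px, baseline_m):
    """Extra distance needed for disparity to drop by one pixel.

    Uses disparity = focal_px * baseline_m / depth_m. Returns infinity
    once the disparity is already one pixel or less.
    """
    if depth_m <= 0:
        raise ValueError("depth_m must be positive")
    disparity_px = focal_px * baseline_m / depth_m
    if disparity_px <= 1.0:
        return float("inf")
    return focal_px * baseline_m / (disparity_px - 1.0) - depth_m


FEET_TO_M = 0.3048
for feet in (2, 4, 8, 16):
    distance_m = feet * FEET_TO_M
    step = depth_step_m(distance_m, 2800.0, 0.01)  # assumed camera values
    print(f"{feet:>2} ft: one pixel of disparity spans {step:.2f} m of depth")
```

Under these assumptions, the depth covered by a single pixel of disparity grows rapidly with distance, which is consistent with the foreground and background becoming hard to tell apart beyond a few feet.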

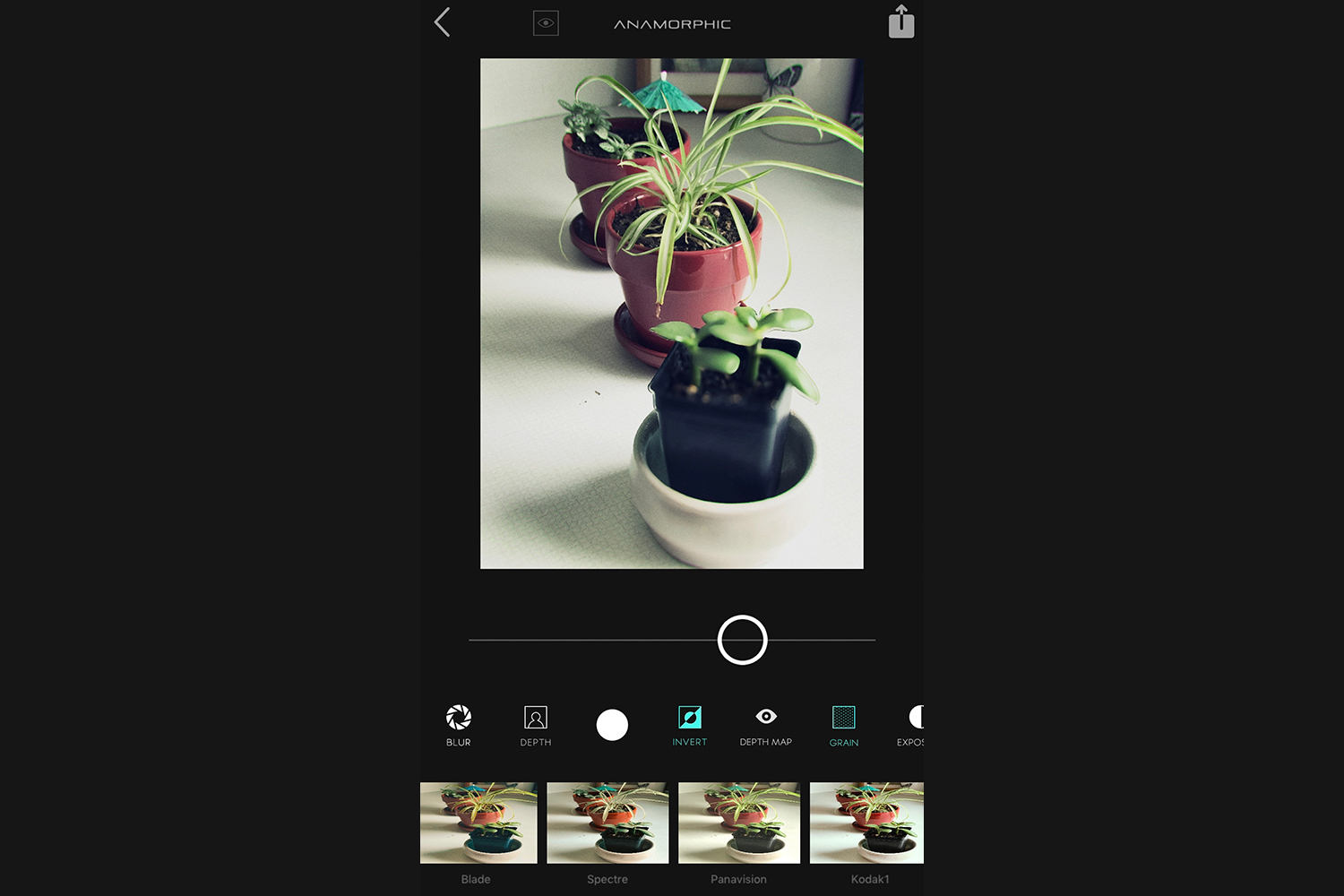

Also, while adjusting the depth map and blur after the shot is a novel feature, this is not the same as refocusing an image. Anamorphic does have the option to invert the depth map (blurring the foreground instead of the background), but this only goes so far. The iPhone lenses natively have a very deep depth of field (meaning most of the depth of an image is in focus), but it is not infinite. If you focus on something close to the lens, you won’t be able to dramatically change the image to make it look like you focused on the background.

Anamorphic also takes the artistic liberty of adding chromatic aberration (purple and green fringing) to the blurred part of the photo, which, at least in its current pre-release form, is not user-controllable. This applies both to images shot with the Anamorphic camera and to those captured via the built-in camera app and edited in Anamorphic. While the effect is not inherently unattractive, we would like to see an option to toggle it on and off in a future release of the app.

An exciting look at what’s to come

While Anamorphic (and iOS 11, for that matter) is still in development, it’s exciting to see the potential of what it offers. It provides a much more robust version of Apple’s Portrait Mode. Frankly, we feel like Anamorphic’s depth map and blur controls should be part of the default camera experience, although we can also appreciate Apple’s desire for simplicity.

Anamorphic is the first of what will likely be numerous apps taking advantage of the new depth APIs in iOS 11, and while it’s not yet perfect, it is certainly promising. We look forward to trying out a final version after iOS 11 is officially available.