“Ray tracing is here,” Nvidia CEO Jensen Huang optimistically proclaimed in a press interview at GTC. “It’s all about ray tracing, ray tracing, ray tracing.”

Nvidia’s wide support of ray tracing – from desktops to laptops to servers and the cloud – signals a strong push behind the new technology, despite early reports suggesting that initial adoption of RTX has been slow.

Nvidia’s confidence might be unshakable, but attacks from its competitors continue to grow in number. AMD has 7nm GPUs, Google is launching a game streaming platform, and even Intel is planning its re-entrance into the graphics game. But from Nvidia’s perspective, it’s only more evidence that it’s headed in the right direction.

The game streaming wars

Bringing RTX to servers will open Nvidia up to more audiences and new possibilities. Designers and creatives can rely on the RTX cloud to render images in real-time and collaborate on projects through the new Nvidia Omniverse platform, while gamers can leverage the power of Nvidia graphics on the GeForce Now game streaming platform, even if their local rigs don’t ship with high-end graphics.

But Nvidia has some new competition in this realm.

While Nvidia was promoting GeForce Now, which is in its second year as a beta, Google announced Stadia, a competing game streaming platform, at the Game Developers Conference (GDC) in San Francisco, California, just fifty miles north of GTC. Though Huang admitted he doesn’t know the specifics behind Stadia, which runs on rival AMD’s custom graphics on Google’s servers, Nvidia is leveraging the familiarity of the GeForce brand in its cloud approach to gaming.

“What we decided to do essentially in a world where gaming is free to play is to build the service for the billion PC customers who don’t have GeForces,” Huang said, alluding to the fact that GeForce Now is targeted at gamers who want enthusiast-level performance but lack the resources to make that happen. “For them, getting access to GeForce in the cloud must be very nice because their PC isn’t strong enough or too old, or the game doesn’t run on Linux or a Mac.”

Driven by its relationships with publishers and the economics of the gaming industry, Nvidia is careful to point out that you must own the title to play, and it’s not creating an all-access subscription service.

“We don’t believe that Netflix for gaming is the right approach,” Huang said. According to Nvidia, the decision to play a game, especially for titles like PUBG, is generally driven by what a gamer’s friends are already playing, so a Netflix model used to surface and discover new titles won’t work. “So our strategy is to leave the economics completely to the publishers, to not get in the way of their relationship with the gamers. Our strategy with GeForce Now is to build the servers and host the service on top.”

In its second year as a beta, GeForce Now, which can be used to stream more than 500 games, is now home to more than 300,000 gamers, with a wait list a million strong. Nvidia will be upgrading the experience of GeForce Now to enable RTX as early as the second or third quarter of this year. “The next build of GeForce Now servers from now on will be RTX, so ray tracing on every single server,” Huang said.

As Nvidia continues to invest in game streaming, it’s looking to scale the service, drive down costs so that gamers can access free-to-play titles, and build more data centers at the edge as part of its hybrid cloud approach in order to minimize latency. Through partnerships with telecoms, Nvidia is hoping to build servers in every country in the world.

Threats from AMD and Intel

Nvidia has been betting on graphics as the way forward for computing since its founding. And with Moore’s Law showing its limits on the CPU side, Nvidia is confident that solving the world’s most meaningful problems requires a strong GPU. With more data collected today than ever before, making sense of all that information requires a lot of computing power. “Our GPU has made it possible for computation to be done very quickly,” Huang said.

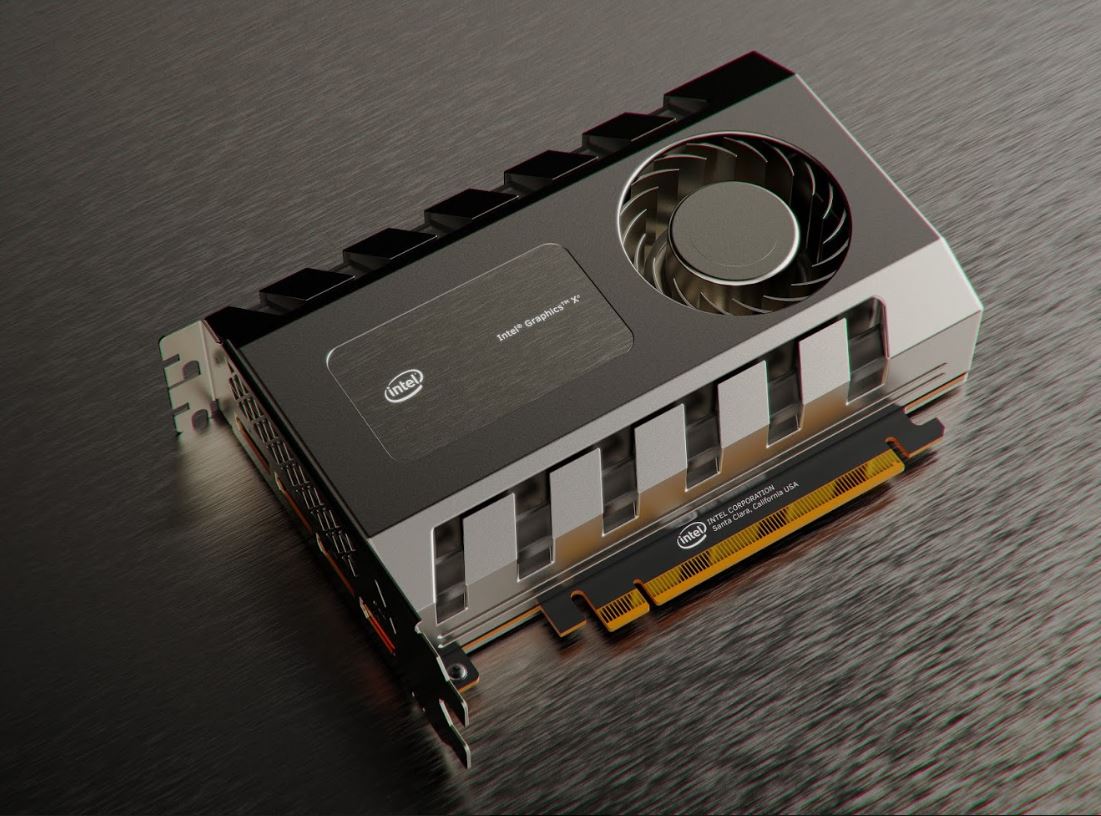

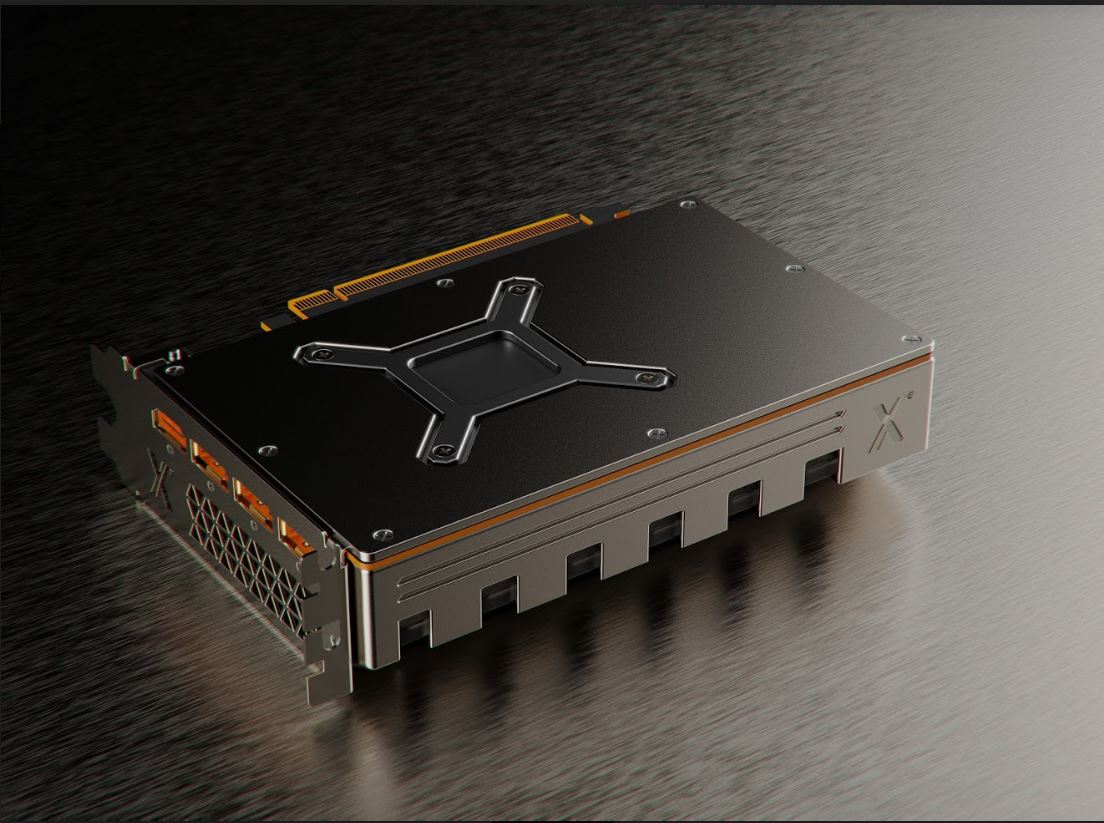

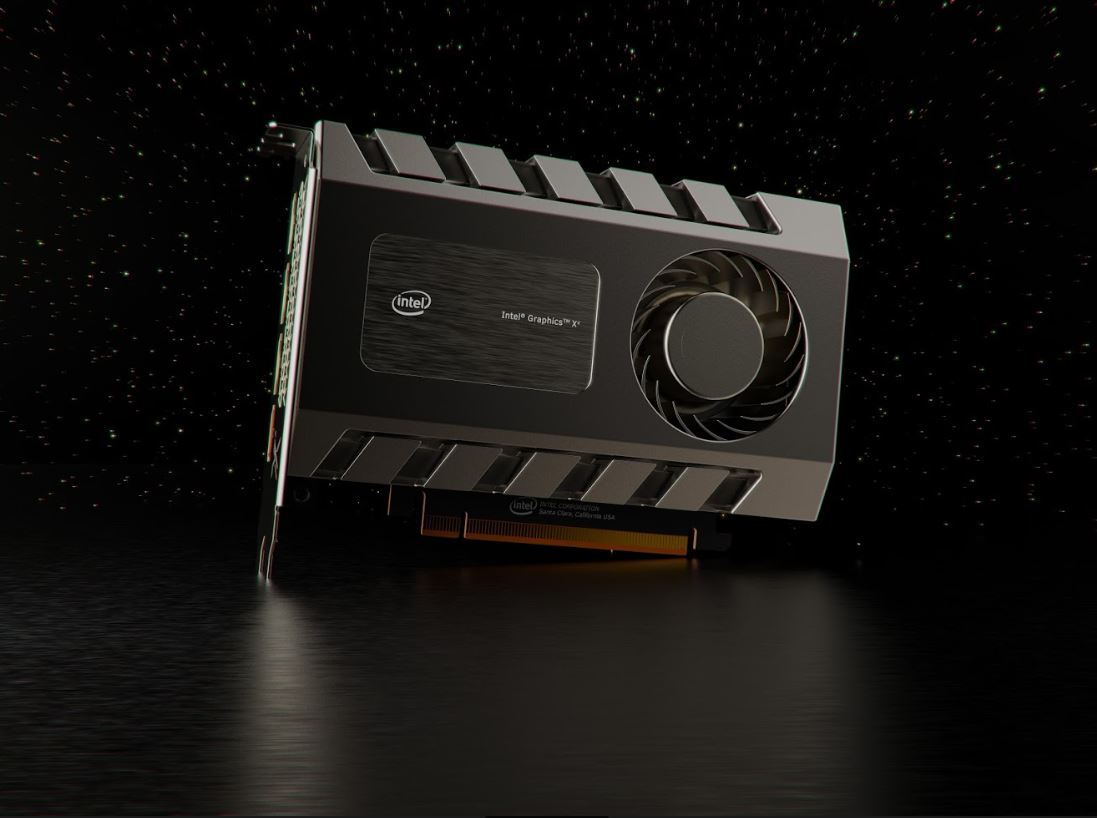

“The CPU is too rare a resource, so you have to offload when you can,” he explained, while welcoming Intel into the graphics market. “Even Intel believes that accelerators are the right path forward, and that’s a good thing. Quantum computing is still decades away, so why don’t we find an approach that is practical, that is here now based on linear computing. So for them to acknowledge that is really fantastic.”

It’s not just newcomers like Intel that are looking to erode Nvidia’s leadership in the graphics market. Rival AMD briefly dominated the conversation in graphics technology earlier this year when it became the first to announce a 7nm gaming GPU, the Radeon VII, boasting a boost in performance and better thermals. In comparison, the Turing architecture that Nvidia introduced for RTX is built on a larger 12nm process. Huang, however, appeared unfazed by what AMD is doing.

“7nm process is open for sale,” Huang mocked. “TSMC would love to sell it to us. What is the genius of a company if we just buy somebody else’s wafers? And what is the benefit of your contribution to their wafers?” Nvidia’s genius is its engineering, and for Huang, the results are about performance and energy, not size alone.

Despite using a larger process node, Huang claimed that Nvidia’s superior engineering allows Turing to outperform its rival. “The energy efficiency is so good, even compared to someone else’s 7nm. It is lower cost, it is lower energy, it has higher performance, and it has more features.”

For Turing, the technology that made the most sense at the time was a 12nm, Nvidia-engineered FinFET process, and Nvidia invested considerable time and money working with TSMC on the chip’s design to deliver the performance it wanted.

As competition heats up in graphics, game streaming, and data centers, Huang remains optimistic about what Nvidia can deliver “because we have good engineers and the software is excellent.”